In this post, we will see how to load

GTFS data into PostgreSQL. GTFS stands for General Transit Feed

Specification (originally, it was called Google Transit Feed

Specification) and allows public transit agencies to publish their

schedules on the web.

The task requires the following steps:

- Download the GTFS data for NYMTA

- Create a database schema for loading the GTFS data

- Load the data on a Postgresql/ PostGIS database

Step 1: Download the GTFS data for NYMTA

We will start with the transit feed for

NYMTA as an example. The first step is getting access to the feed.

This is available at the following site.

We can go ahead and download the data

on our machine. Next step is to import the data into a database,

which we will do in the next step.

Step 2: Create a database and user to load the data

My database of choice is Postgresql and PostGIS, so that is what I

used. The first step is to create the database and enable spatial

extension.

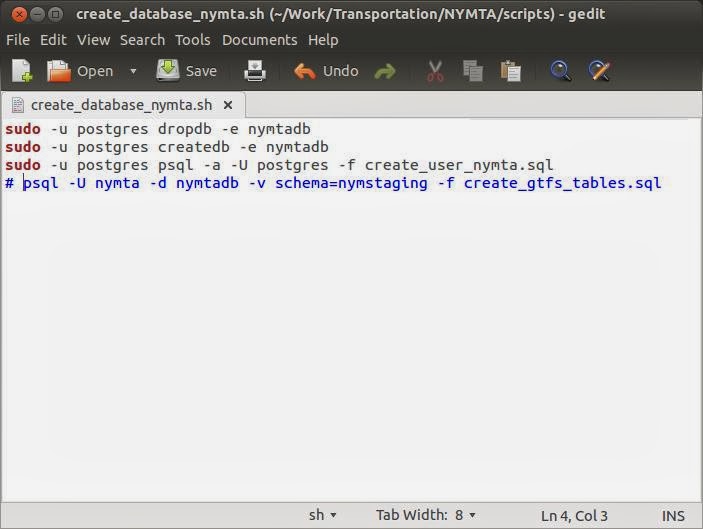

The first script drops a previously existing database, creates the

database and creates the user and schema.

create_database_nymta.sh

sudo -u postgres

dropdb -e nymtadb

sudo -u postgres

createdb -e nymtadb

sudo -u postgres

psql -a -U postgres -f create_user_nymta.sql

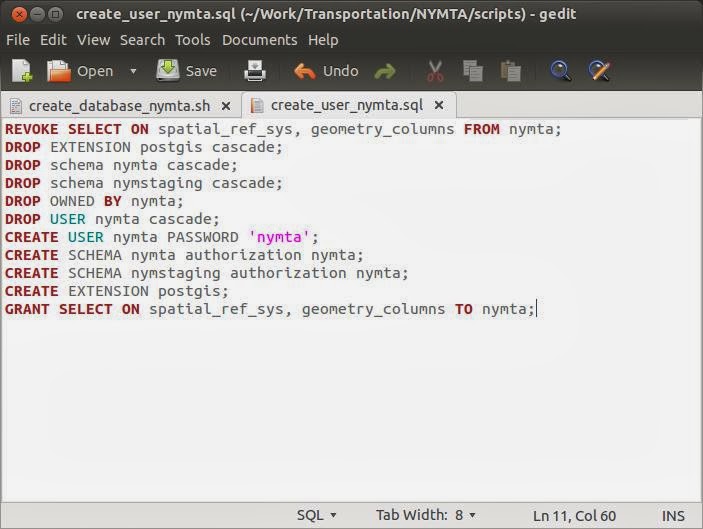

This script calls the following SQL script to create the user and

schemas.

create_user_nymta.sql

REVOKE SELECT ON

spatial_ref_sys, geometry_columns FROM nymta;

DROP EXTENSION

postgis;

DROP schema nymta

cascade;

DROP schema

nymstaging cascade;

DROP OWNED BY nymta;

DROP OWNED BY nymta;

DROP USER nymta;

CREATE USER nymta

PASSWORD 'nymta';

CREATE SCHEMA nymta

authorization nymta;

CREATE SCHEMA

nymstaging authorization nymta;

CREATE EXTENSION

postgis;

GRANT SELECT ON

spatial_ref_sys, geometry_columns TO nymta;

This is how the above script looks in my editor.

To run the script, I typed

$ ./create_database_nymta.sh

Executing the above scripts, we will get prompted for sudo password

once. Here is the console output from the execution on my machine,

for reference.

Step 3: Load GTFS data on RDBMS for easier handling

Next step is to load the GTFS data in

RDBMS for handling. First step is to create a target schema for

storing the data.

Here is my script for creating the

database schema for creating GTFS tables in my staging schema.

create_gtfs_tables.sql

DROP

TABLE GTFS_AGENCY;

DROP

TABLE GTFS_ROUTES;

DROP

TABLE GTFS_STOPS;

DROP

TABLE GTFS_TRIPS;

DROP

TABLE GTFS_STOP_TIMES;

DROP

TABLE GTFS_SHAPES;

DROP

TABLE GTFS_CALENDAR;

DROP

TABLE GTFS_CALENDAR_DATES;

DROP

TABLE GTFS_TRANSFERS;

/*

agency_id,agency_name,agency_url,agency_timezone,agency_lang,agency_phone

MTA

NYCT,MTA New York City Transit,

http://www.mta.info,America/New_York,en,718-330-1234

*/

CREATE

TABLE GTFS_AGENCY

(

agency_id

VARCHAR(10),

agency_name

VARCHAR(50),

agency_url

VARCHAR(100),

agency_timezone

VARCHAR(50),

agency_lang

VARCHAR(2),

agency_phone

VARCHAR(20)

);

/*

routes.txt

route_id,agency_id,route_short_name,route_long_name,route_desc,route_type,route_url,route_color,route_text_color

1,MTA

NYCT,1,Broadway - 7 Avenue Local,"Trains operate between 242 St

in the Bronx and South Ferry in Manhattan, most

times",1,http://www.mta.info/nyct/service/pdf/t1cur.pdf,EE352E,

*/

CREATE

TABLE GTFS_ROUTES

(

route_id

NUMERIC(5),

agency_id

NUMERIC(3),

route_short_name

VARCHAR(10),

route_long_name

VARCHAR(100),

route_desc

VARCHAR(100),

route_type

NUMERIC(3),

route_url

VARCHAR(100),

route_color

VARCHAR(8),

route_text_color

VARCHAR(8)

);

/*

stops.txt

stop_id,stop_code,stop_name,stop_desc,stop_lat,stop_lon,zone_id,stop_url,location_type,parent_station

101,,Van

Cortlandt Park - 242 St,,40.889248,-73.898583,,,1,

*/

CREATE

TABLE GTFS_STOPS

( stop_id

NUMERIC(10),

stop_code

VARCHAR(10),

stop_name

VARCHAR(100),

stop_desc

VARCHAR(100),

stop_lat

NUMERIC(38,8),

stop_lon

NUMERIC(38,8),

zone_id

NUMERIC(5),

stop_url

VARCHAR(100),

location_type

NUMERIC(2),

parent_station

NUMERIC(38)

);

/*

trips.txt

route_id,service_id,trip_id,trip_headsign,direction_id,block_id,shape_id

1,A20130803WKD,A20130803WKD_000800_1..S03R,SOUTH

FERRY,1,,1..S03R

*/

CREATE

TABLE GTFS_TRIPS

(

route_id

NUMERIC(10),

service_id

VARCHAR(10),

trip_id

NUMERIC(10),

trip_headsign

VARCHAR(10),

trip_short_name

VARCHAR(50),

direction_id

NUMERIC(2),

block_id

NUMERIC(10),

shape_id

NUMERIC(10)

);

/*

stop_times.txt

trip_id,arrival_time,departure_time,stop_id,stop_sequence,stop_headsign,pickup_type,drop_off_type,shape_dist_traveled

A20130803WKD_000800_1..S03R,00:08:00,00:08:00,101S,1,,0,0,

*/

CREATE

TABLE GTFS_STOP_TIMES

(

trip_id

NUMERIC(10),

arrival_time

TIMESTAMP,

departure_time

TIMESTAMP,

stop_id

NUMERIC(10),

stop_sequence

NUMERIC(10),

stop_headsign

VARCHAR(30),

pickup_type

VARCHAR(100),

drop_off_type

VARCHAR(100)

);

/*

shapes.txt

shape_id,shape_pt_lat,shape_pt_lon,shape_pt_sequence,shape_dist_traveled

4..N06R,40.668897,-73.932942,0,

*/

CREATE

TABLE GTFS_SHAPES

(

shape_id

NUMERIC(6),

shape_pt_lat

NUMERIC,

shape_pt_lon

NUMERIC,

shape_pt_sequence

NUMERIC(6),

shape_dist_traveled

NUMERIC

);

/*

calendar_dates.txt

service_id,date,exception_type

*/

CREATE

TABLE GTFS_CALENDAR_DATES

(

service_id

VARCHAR(10),

exception_date

DATE,

exception_type

VARCHAR(10)

);

/*

calendar.txt

service_id,monday,tuesday,wednesday,thursday,friday,saturday,sunday,start_date,end_date

A20130803WKD,1,1,1,1,1,0,0,20130803,20141231

*/

CREATE

TABLE GTFS_CALENDAR

(

service_id

VARCHAR(10),

monday

NUMERIC(1),

tuesday

NUMERIC(1),

wednesday

NUMERIC(1),

thursday

NUMERIC(1),

friday

NUMERIC(1),

saturday

NUMERIC(1),

sunday

NUMERIC(1),

start_date

DATE,

end_date

DATE

);

/*

transfers.txt

from_stop_id,to_stop_id,transfer_type,min_transfer_time

101,101,2,180

*/

CREATE

TABLE GTFS_TRANSFERS

(

from_stop_id

NUMERIC(10),

to_stop_id

NUMERIC(10),

transfer_type

NUMERIC(3),

min_transfer_time

NUMERIC(6)

);

To execute the script we need to run the following command on the terminal

$ psql -U nymta -d nymtadb -v schema=nymstaging -f create_gtfs_tables.sql

Executing the same, creates the neccessary tables on our schema.

Next step is to download some spatial data from public sources. We can download the Street centerline data for NYC from the following website.

Opening the data in desktop GIS, we can

see the amount of data available.

Next step, I wanted to do was to load

the GTFS data into the staging schema we just created. For that, we

will use GeoKettle which is a utility for loading data from text

files into PostgreSQL. This I will cover in my next post.

No comments:

Post a Comment